Intro

For this article, we will be running a few tests to determine whether MySQL or MongoDB is better when it comes to the query speed for the IP2Location DB24 data. We have generated some test data with random IP addresses. There are 10 test files containing IP addresses with the first file containing 100,000 IP addresses and subsequent files have 100,000 more than the previous.

Note: You will need a subscription to the DB24 data if you wish to run your own benchmark.

Subscribe here: https://www.ip2location.com/database/ip2location

Once you have a subscription to DB24, you can extract the CSV data file and follow our steps below.

Testing environment

The machine that is being used for the benchmark is an Amazon Web Service EC2 machine of the t2.large instance type so it comes with 2 vCPUs and 8GB RAM. We have 20GB General Purpose SSD (gp2) onboard.

We are using Debian 9 for the OS with PHP 7.0 installed to run the benchmark scripts. Obviously, we also have MySQL 5.7 and MongoDB 4.0.14 since we are comparing these 2 database engines. Both MySQL and MongoDB are installed with default settings because most users will be deploying them that way.

Generate test data script

Below is the PHP script generatetestdata.php that we will use to generate our test data. You can call it on the command line with the number of random IPs you want in the test file.

<?php

$totalip = $argv[1];

$iparr = [];

for ($x = 0; $x < $totalip; ++$x) {

$iparr[] = mt_rand(0, 255) . '.' . mt_rand(0, 255) . '.' . mt_rand(0, 255) . '.' . mt_rand(0, 255);

}

file_put_contents('data-' . $totalip, implode("\n", $iparr));

?>

Usage example below:

php generatetestdata.php 100000

The above command will generate 100,000 random IP addresses and write them to the file called data-100000.

Creating the MySQL database & table

Run the following SQL to create the database and table for our IP2Location DB24 data.

CREATE DATABASE ip2location;

USE ip2location;

CREATE TABLE `ip2location_db24`(

`ip_from` INT(10) UNSIGNED,

`ip_to` INT(10) UNSIGNED,

`country_code` CHAR(2),

`country_name` VARCHAR(64),

`region_name` VARCHAR(128),

`city_name` VARCHAR(128),

`latitude` DOUBLE,

`longitude` DOUBLE,

`zip_code` VARCHAR(30),

`time_zone` VARCHAR(8),

`isp` VARCHAR(256),

`domain` VARCHAR(128),

`net_speed` VARCHAR(8),

`idd_code` VARCHAR(5),

`area_code` VARCHAR(30),

`weather_station_code` VARCHAR(10),

`weather_station_name` VARCHAR(128),

`mcc` VARCHAR(256),

`mnc` VARCHAR(256),

`mobile_brand` VARCHAR(128),

`elevation` INT(10),

`usage_type` VARCHAR(11),

PRIMARY KEY (`ip_to`)

) ENGINE=MyISAM DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

Importing DB24 CSV into MySQL table

Run the SQL below to import data from the CSV file into the MySQL table.

LOAD DATA LOCAL INFILE './IP-COUNTRY-REGION-CITY-LATITUDE-LONGITUDE-ZIPCODE-TIMEZONE-ISP-DOMAIN-NETSPEED-AREACODE-WEATHER-MOBILE-ELEVATION-USAGETYPE.CSV' INTO TABLE `ip2location_db24` FIELDS TERMINATED BY ',' ENCLOSED BY '"' LINES TERMINATED BY '\r\n';

Creating the MongoDB database, collection and importing the DB24 data

Run the following command in Bash to create the database and collection (table equivalent) as well as import the CSV data.

mongoimport -u mongoAdmin -p changeMe --authenticationDatabase admin --drop --db ip2location --collection db24 --type csv --file "./IP-COUNTRY-REGION-CITY-LATITUDE-LONGITUDE-ZIPCODE-TIMEZONE-ISP-DOMAIN-NETSPEED-AREACODE-WEATHER-MOBILE-ELEVATION-USAGETYPE.CSV" --fields ip_from,ip_to,country_code,country_name,region_name,city_name,latitude,longitude,zip_code,time_zone,isp,domain,net_speed,idd_code,area_code,weather_station_code,weather_station_name,mcc,mnc,mobile_brand,elevation,usage_type

Creating the MongoDB index to speed up queries

Create a text file called buildindexipv4 and paste the following codes.

use ip2location

db.db24.ensureIndex({ip_to: 1})

exit

After saving the file, you can run the following in Bash to create the index.

mongo -u mongoAdmin -p changeMe --authenticationDatabase admin < buildindexipv4

Creating the MySQL benchmark script

Create a PHP file called benchmarkmysql.php and paste the following codes.

<?php

// exec('echo 1 > /proc/sys/vm/drop_caches');

exec('service mysql start');

sleep(10);

$pdo = new PDO('mysql:host=localhost;dbname=ip2location;charset=utf8', 'root', '12345');

$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

$testfile = $argv[1];

$testdata = file_get_contents($testfile);

$iparr = explode("\n", $testdata);

unset($testdata);

$totalip = count($iparr);

$totaltime = 0;

foreach ($iparr as $myip) {

$ipnum = sprintf("%u", ip2long($myip));

// echo "Testing " . $myip . " (" . $ipnum . ")\n\n";

$start = microtime(true);

$st = $pdo->prepare('select * from `ip2location_db24` where ip_to >= :ipnum limit 1');

$st->bindParam(':ipnum', $ipnum, PDO::PARAM_INT);

$st->execute();

$data = $st->fetchAll(PDO::FETCH_ASSOC);

$end = microtime(true);

$totaltime += ($end - $start);

// var_dump($data);

// echo "==================================================================================================\n";

}

echo "MySQL\n";

echo "==========\n";

echo "Total time for " . $totalip . " IPs: " . round($totaltime, 4) . "secs.\n";

echo "Average time for " . $totalip . " IPs: " . round($totaltime / $totalip, 4) . "secs.\n\n";

echo "Average time for " . $totalip . " IPs: " . round(($totaltime / $totalip) * 1000, 4) . "msecs.\n\n";

exec('service mysql stop');

?>

NOTE: We have suppressed the DB24 data output as we are only interested in the time taken. We are also starting the mysql service before running the script and stopping it when the script is done. This is to make each test run under similar conditions.

Creating the MongoDB benchmark script

Create a PHP file called benchmarkmongo.php and paste the following codes.

<?php

// exec('echo 1 > /proc/sys/vm/drop_caches');

exec('service mongod start');

sleep(10);

require 'vendor/autoload.php';

$user = "mongoAdmin";

$pwd = "changeMe";

$m = new MongoDB\Client("mongodb://${user}:${pwd}@localhost:27017");

// select a database

$db = $m->ip2location;

// select a collection (analogous to a relational database's table)

$collection = $db->db24;

$testfile = $argv[1];

$testdata = file_get_contents($testfile);

$iparr = explode("\n", $testdata);

unset($testdata);

$totalip = count($iparr);

$totaltime = 0;

foreach ($iparr as $myip) {

$ipnum = sprintf("%u", ip2long($myip));

// echo "Testing " . $myip . " (" . $ipnum . ")\n\n";

$start = microtime(true);

// filter those ip_to which are greater than our IP number

$query = array('ip_to' => array('$gte' => (float)$ipnum));

// perform query and return a single result

$data = $collection->findOne($query);

$end = microtime(true);

$totaltime += ($end - $start);

// var_dump($data);

// echo "==================================================================================================\n";

}

echo "MongoDB\n";

echo "==========\n";

echo "Total time for " . $totalip . " IPs: " . round($totaltime, 4) . "secs.\n";

echo "Average time for " . $totalip . " IPs: " . round($totaltime / $totalip, 4) . "secs.\n\n";

echo "Average time for " . $totalip . " IPs: " . round(($totaltime / $totalip) * 1000, 4) . "msecs.\n\n";

exec('service mongod stop');

?>

NOTE: As with the MySQL benchmark script, we have suppressed the DB24 data output as we are only interested in the time taken. We are also starting the mongod service before running the script and stopping it when the script is done. This is to make each test run under similar conditions.

Performing the benchmark

For the benchmark, we will run the MySQL benchmark script against the smallest test file and record the average milliseconds taken per query.

php benchmarkmysql.php <test_data_file>

Next, we will execute the MongoDB benchmark script against the same test file.

php benchmarkmongo.php <test_data_file>

Then we record the average query time. One by one, we will repeat the steps with all remaining test files from the smallest to the largest file.

You might have noticed both benchmark scripts have this line commented out.

exec('echo 1 > /proc/sys/vm/drop_caches');

This line clears the page cache in Debian which could cache files on non-volatile storage. We also ran the whole benchmark again but with this line uncommented in both scripts. By doing so, we can have a better idea how the OS-level caching has affected the performance of both MySQL and MongDB.

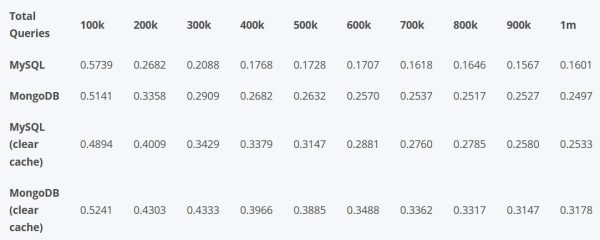

Our benchmark numbers

Below are our average query times in milliseconds.

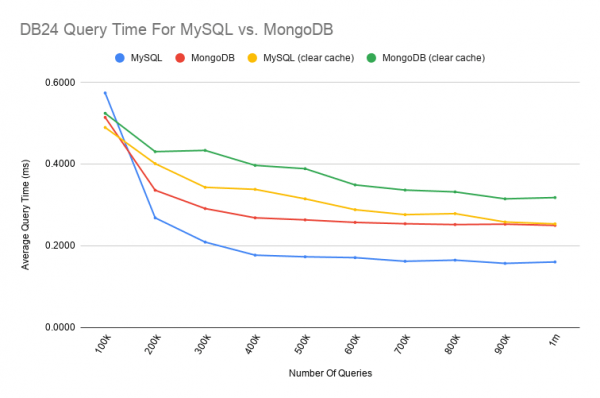

Below, you’ll find a nice colorful line chart to show you the differences. You can easily see which option performed the best when dealing with a large number of queries.

As expected, if you clear the page cache, you will see the time taken is higher. Caching does help both MySQL and MongoDB to speed things up. When both database engines have sub-milliseconds query times, most users won’t need to worry about which one to choose.

Pros & cons of using MySQL vs. MongoDB

If you are dealing with a huge number of queries and you only have a single database server, based on the benchmark numbers, MySQL is the faster option. On the other hand, if you feel that the difference in the query times is minuscule then either database engine should do the job just fine.

Assuming your website grows in popularity, you will find one day that a single database may not cut it. There are limits to how much you can scale up a particular machine. What this means is you need to scale out and have more machines serving your database queries.

MongoDB is great for scaling out but that only helps if your data can be sharded. In the case of the IP2Location DB24, it is not possible to shard so all you can do is to create a replica set with multiple MongoDB servers containing the same dataset.

Essentially, this is no different from having a MySQL replication setup with a master and multiple slaves.

Conclusion

Due to the fact that DB24 is not suitable for sharding, it is better to opt for a MySQL database to host your geolocation data because of the slightly faster query time. Having said that, if your infrastructure is built to accommodate MongoDB, then by all means, use MongoDB to store the DB24.